9 Lines of Code to Talk to an Open Source LLM

Jul 30, 2024

Many people are extremely conversant in AI, but still haven't typed that first command to interact with a real AI model.

Let's change that in a single blog post.

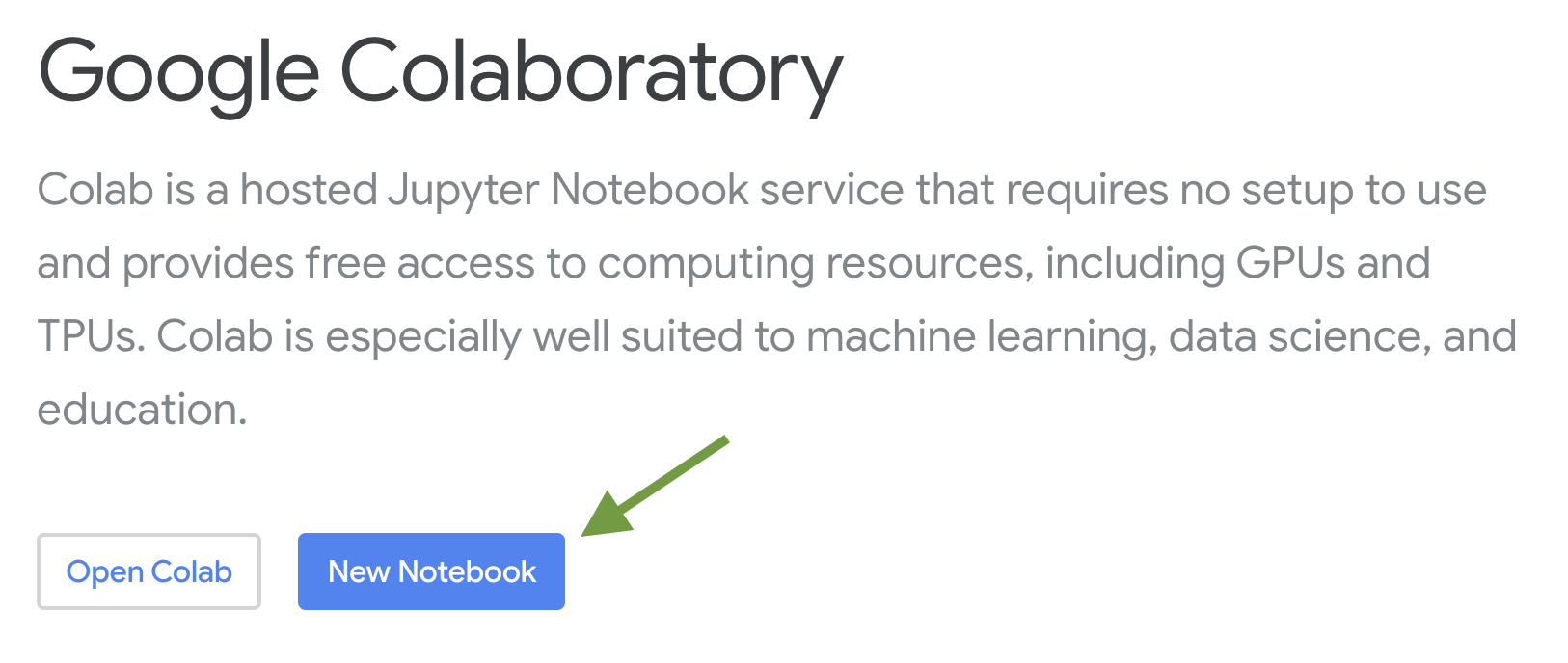

The first technology you need is called a Jupyter Notebook. There are lots of online hosted versions available, and you can also run them on your own machine. But since pretty much everyone has a Gmail account these days, let's go with Google's free version, called Colab Notebooks. Here's the link:

Once you're there, click "New Notebook". You'll need to sign in with your Google account if you're not already signed in.

Jupyter notebooks are a playground for trying out Python commands without having to build a bunch of complex software infrastructure around them. They're used for all kinds of tasks, not just AI and machine learning (I used them in a class on quantum computing, for example).

Let's Make Something Appear

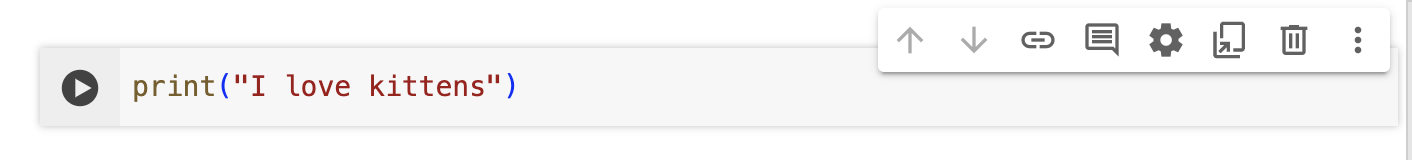

First, enter the following code:

print("I love kittens")

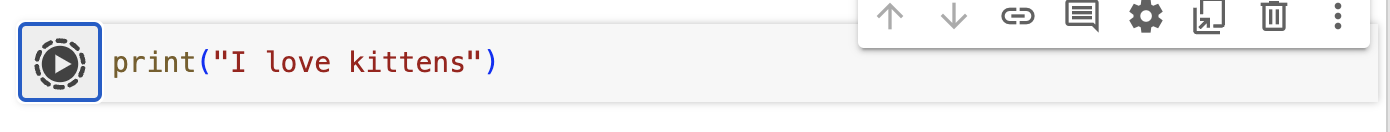

Then click the "play" button to the left.

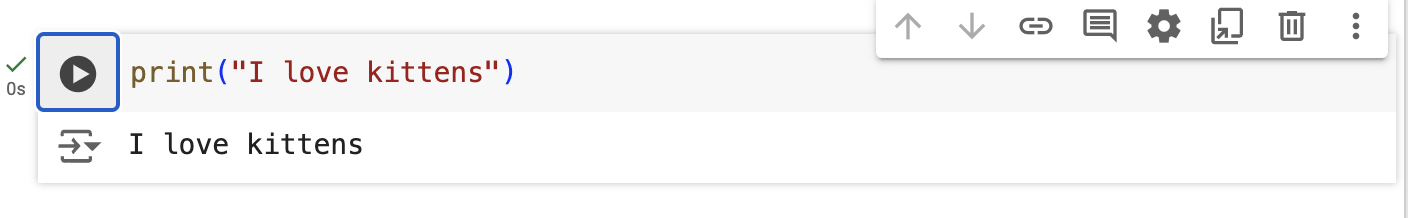

The first command will take a moment to connect to the Google compute engine behind the notebook. Once it's done you'll see a green check mark, an indicator of how much time elapsed, and the output of your command below the code.

That's it! Now let's swap out the silly print statement for something that calls a real AI model.

Call a Real AI Model

Hover over the area below the first box and you'll see two buttons appear: "+ Code" and "+ Text".

Click on "+ Code".

Input the following code:

!pip install transformers torch

Click the play button. You'll have to wait a bit because the notebook is downloading and installing some big Python libraries in the background. It took about 1 minute for mine to finish running.

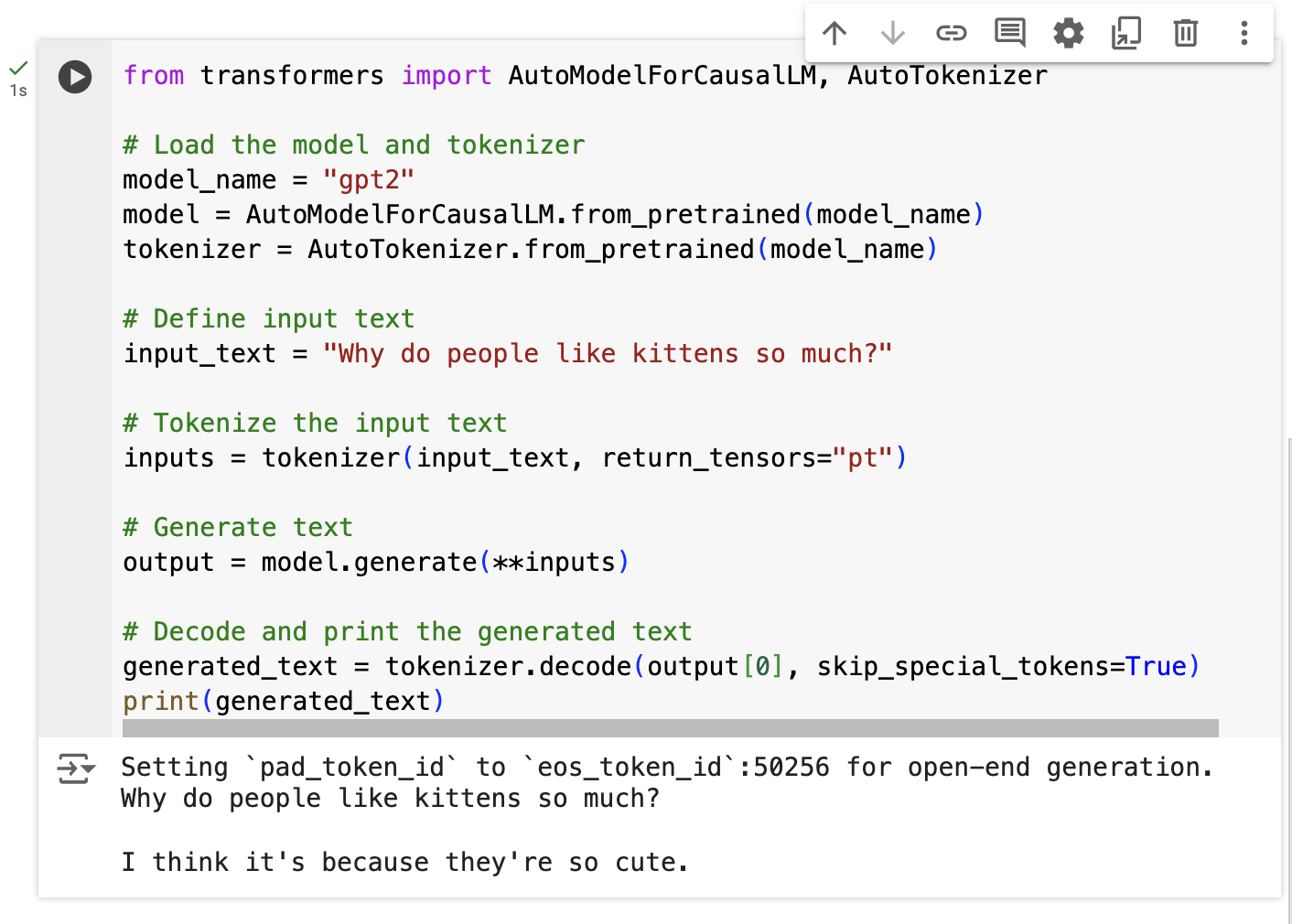
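If you want to confirm the install worked before moving on, a small check like the one below can help. This is only a sketch: `installed_version` is a helper name made up for this post, and it relies on Python's standard `importlib.metadata` module to look up each package.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string for a package, or None if it is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# Report on the two packages installed by the pip command above
for name in ("transformers", "torch"):
    version = installed_version(name)
    print(f"{name}: {version if version else 'not installed'}")
```

If either line says "not installed", re-run the pip cell and wait for it to finish.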

Create another code block like you just did. In the new code block, type the following and click the play button. You may get asked about access to an "HF_TOKEN" environment variable, but you can ignore this.

from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the model and tokenizer

model_name = "gpt2"

model = AutoModelForCausalLM.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Ask a silly question

input_text = "Why do people like kittens so much?"

# Tokenize the input text

inputs = tokenizer(input_text, return_tensors="pt")

# Generate text

output = model.generate(**inputs)

# Decode and print the generated text

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

print(generated_text)

Here's the output I got:

Congratulations!

You have now interacted with a real open source LLM using code.
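If you're curious what the "Tokenize the input text" step actually produced, here is a minimal sketch for peeking at it. `show_tokens` is a hypothetical helper name, and it assumes you pass in the `tokenizer` object loaded in the cell above.

```python
def show_tokens(tokenizer, text):
    """Return (token_id, token_text) pairs for the given text."""
    # Calling the tokenizer without return_tensors gives plain Python lists
    token_ids = tokenizer(text)["input_ids"]
    # Map each numeric ID back to the text fragment it stands for
    token_texts = tokenizer.convert_ids_to_tokens(token_ids)
    return list(zip(token_ids, token_texts))
```

In a new cell, try `show_tokens(tokenizer, "Why do people like kittens so much?")`. With the GPT-2 tokenizer, fragments that begin with a space show up with a leading "Ġ" character. The model never sees your words directly, only the list of numbers.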

Play around with some other inputs. The AI field, as rooted in math as it is, is much more about experimenting than traditional coding. You'll learn the most by experimenting.
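One easy way to experiment is to change how the text is generated, not just the prompt. The sketch below wraps the steps from above in a function and passes a few extra settings to `model.generate`. The name `generate_reply` is made up for this post, the values are arbitrary starting points rather than recommendations, and it assumes the `model` and `tokenizer` you already loaded.

```python
def generate_reply(model, tokenizer, prompt, **overrides):
    """Generate a continuation of `prompt`, with settings you can override."""
    settings = {
        "max_new_tokens": 40,   # how many tokens to add after the prompt
        "do_sample": True,      # pick tokens at random instead of always the likeliest
        "temperature": 0.8,     # lower is more predictable, higher is more varied
        "top_k": 50,            # only sample from the 50 likeliest next tokens
        "pad_token_id": tokenizer.eos_token_id,  # GPT-2 has no pad token of its own
    }
    # Any keyword arguments passed by the caller replace the defaults above
    settings.update(overrides)

    inputs = tokenizer(prompt, return_tensors="pt")
    output = model.generate(**inputs, **settings)
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

In a new cell, try `print(generate_reply(model, tokenizer, "Why do people like kittens so much?", temperature=1.2))`. Because sampling is random, you should expect different text on every run.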